Docker – Unlocking the Power of Containers

Introduction:

As a software professional, you’re no stranger to the challenges of working with different environments, dependencies, and tools. Whether you’re developing locally, running tests, or deploying apps in production, managing consistent environments can often feel like a headache. That’s where Docker comes in.

Docker has transformed the way developers, DevOps engineers, and system administrators work. With its container-based approach, Docker allows you to package applications with all their dependencies and configurations, making them easily portable, scalable, and isolated.

If you’re a hands-on professional looking to get started with Docker, this blog is for you. We’ll cover what Docker is, how it works, and how to get hands-on with Docker to make your development process smoother, faster, and more efficient.

What is Docker?

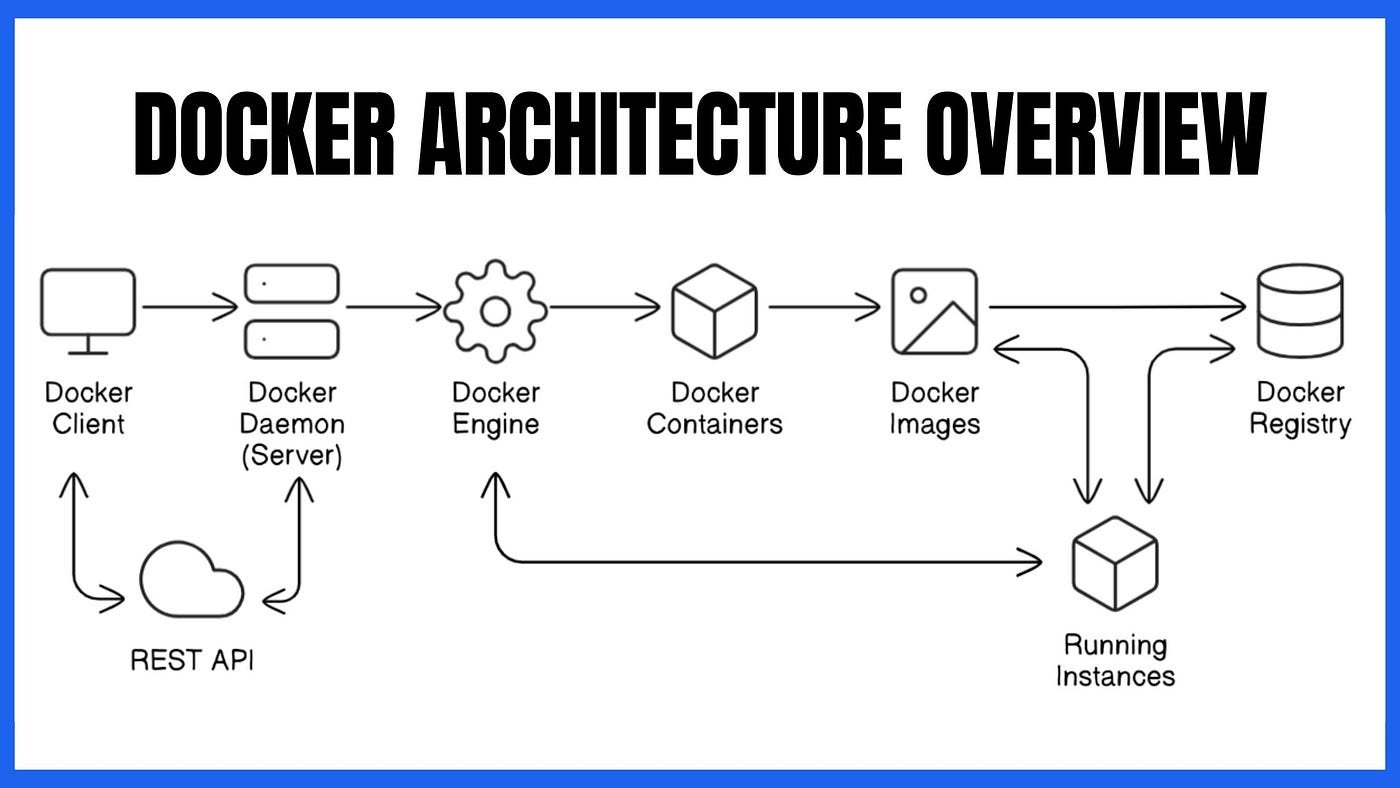

At its core, Docker is a platform for developing, shipping, and running applications in containers. A container is an isolated environment that bundles an application’s code, dependencies, libraries, and configuration, ensuring that it runs consistently across any system.

Think of Docker as the solution to the common problems of “it works on my machine” and “dependency conflicts”. By packaging your application and all its requirements into a single container, Docker ensures it runs in the same way on your local machine, testing environments, or production servers.

Key Docker Concepts to Know:

- Image: A Docker image is a read-only template that defines everything needed to run a container, from a base filesystem (such as a minimal Ubuntu userland) to your application code and dependencies.
- Container: A container is a running instance of an image. Containers are lightweight, portable, and isolated from each other.
- Dockerfile: A Dockerfile is a text file of instructions that Docker executes to build an image. It automates the process of setting up your environment.
- Docker Hub: Docker's public registry, where you can find pre-built images for many applications and publish your own.
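To make the relationship between a Dockerfile, an image, and a container concrete, here is a minimal sketch that writes a Dockerfile into a scratch build context. The `ubuntu:24.04` base tag, the `/greeting.txt` file, and the `hello-docker` image name used below are illustrative assumptions, not part of any official example.

```shell
#!/usr/bin/env bash
# Sketch: create a scratch build context containing a minimal Dockerfile.
# The base tag, file name, and message are illustrative assumptions.
set -euo pipefail

demo_dir="$(mktemp -d)"   # temporary directory used as the build context

cat > "$demo_dir/Dockerfile" <<'EOF'
# Base image pulled from Docker Hub
FROM ubuntu:24.04
# Each RUN instruction produces a new read-only image layer
RUN echo "Hello from inside a container" > /greeting.txt
# Default command for containers started from this image
CMD ["cat", "/greeting.txt"]
EOF

echo "Dockerfile written to $demo_dir"
cat "$demo_dir/Dockerfile"
```

With Docker installed, `docker build -t hello-docker "$demo_dir"` turns this Dockerfile into an image. Then `docker run --rm hello-docker` starts a container from that image, prints the greeting, and removes the container when it exits.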

Why Docker is Essential for Software Professionals

1. Streamlined Development Workflows

Docker makes it incredibly easy to set up consistent development environments. No more spending hours configuring your environment or worrying about dependency versions — Docker lets you create reusable environments with minimal effort.

2. Isolated Environments

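Each container gets its own processes, filesystem, and network stack. This lets you run multiple applications with conflicting dependencies side by side on the same system without them interfering with each other. All containers do share the host's kernel, which makes this isolation lighter-weight than a full virtual machine.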

3. Portability Across Systems

With Docker, you can run your application on your local machine, test it on staging, and deploy it to production from the same image. It behaves consistently everywhere, provided each host runs a compatible kernel and CPU architecture.

4. Easy Scaling

When your application grows, Docker’s lightweight containers can be spun up quickly, allowing you to scale your application seamlessly. Combined with orchestration tools like Kubernetes, Docker enables efficient horizontal scaling.

Container Technology: A Brief History and Background

Containers have been around for many years, but they've gained significant popularity recently due to advancements in technology and the rise of cloud-native applications. While Docker is one of the most well-known container platforms today, containers themselves are built on foundational technologies. These date back to early Unix and were later extended in the Linux kernel.

The Birth of Containers

Containers have their roots in the UNIX chroot (change root) system call, introduced in Version 7 Unix in 1979. chroot changes a process's apparent root directory so that it can see only one subtree of the filesystem. However, it isolates only the filesystem view: processes, networking, and users remain shared with the host.

In 2008, the LXC (Linux Containers) project combined newer Linux kernel features, namespaces and control groups (cgroups), into a userspace toolset. It allowed multiple isolated environments to run on the same physical machine, all sharing a single Linux kernel. This laid the groundwork for modern containerization.

The Rise of Docker

Docker was born out of the need for a more user-friendly and efficient container system. It was developed in 2013 by Solomon Hykes and his team at dotCloud, a platform-as-a-service (PaaS) company. Docker leveraged LXC to allow for the creation of portable, lightweight containers that could be easily shared, run, and deployed.

What set Docker apart was its ability to simplify containerization, making it easy to package applications, manage dependencies, and ensure consistency across environments. Docker’s major innovations include:

- Docker Images: A containerized snapshot of an application and its dependencies.
- Dockerfile: A simple, human-readable way to automate the building of images.
- Docker Hub: A global repository for container images that allows developers to share and discover containers.

Since its release, Docker has exploded in popularity, becoming the de facto standard for containerization. It has redefined software deployment by enabling microservices, DevOps practices, and cloud-native architectures.

Linux Kernel Features Enabling Containerization

At the heart of Docker and modern container technologies is the Linux kernel. Docker, and containers in general, leverage several key features of the Linux kernel that allow for lightweight isolation and process management. These features include:

1. Namespaces

Namespaces are a fundamental building block of containerization. They isolate the container’s environment from the host system and other containers. The most commonly used namespaces are:

- PID Namespace: Isolates process IDs, so processes inside a container are unaware of processes outside it.
- Network Namespace: Provides containers with their own network stack, including IP addresses, network interfaces, and routing tables.
- Mount Namespace: Isolates the filesystem, allowing containers to have their own view of the filesystem, independent of the host.
- UTS Namespace: Isolates the hostname and domain name of the container.
- IPC Namespace: Isolates inter-process communication resources (semaphores, message queues, shared memory).
- User Namespace: Provides user and group ID isolation, allowing containers to have their own user mapping.
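On any Linux host you can see these namespaces directly. The sketch below lists the namespace handles of the current shell from `/proc/$$/ns`. Two processes share a namespace when their handles show the same number in brackets. It assumes a Linux system with `/proc` mounted and does not need Docker.

```shell
#!/usr/bin/env bash
# Sketch: print the namespaces the current shell belongs to.
# Each /proc/<pid>/ns entry is a symlink whose target looks like "uts:[<inode>]";
# the number in brackets identifies the namespace instance.
set -euo pipefail

for ns in pid net mnt uts ipc user; do
  printf '%-5s %s\n' "$ns" "$(readlink "/proc/$$/ns/$ns")"
done
```

You can run the same check inside a container, for example `docker run --rm ubuntu:latest readlink /proc/self/ns/uts`. It should report a different number than the host, because Docker gives each container its own UTS namespace. Likewise, `docker run --rm ubuntu:latest sh -c 'echo $$'` prints `1`: the shell is the first process in the container's own PID namespace.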

2. Control Groups (cgroups)

Control groups (cgroups) are another critical kernel feature. They let containers share resources (like CPU, memory, and I/O) while ensuring that no container uses more than its allocated share. Cgroups enable:

- Resource Limiting: Containers can be limited to specific CPU and memory usage.
- Resource Accounting: Track how much CPU or memory each container is consuming.
- Prioritization: Set priorities for containers, ensuring important containers get more resources.
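To see cgroups at work, the sketch below does two things. It prints the cgroup membership of the current shell, and it reports whether the host uses the unified cgroup v2 hierarchy. It assumes Linux with cgroups mounted at the conventional `/sys/fs/cgroup` path.

```shell
#!/usr/bin/env bash
# Sketch: inspect cgroup membership and the cgroup version in use.
set -euo pipefail

# One line per hierarchy; on cgroup v2 there is a single "0::/<path>" line.
cat /proc/self/cgroup

# cgroup v2 mounts a unified hierarchy whose filesystem type is "cgroup2fs".
fs_type="$(stat -fc %T /sys/fs/cgroup 2>/dev/null || echo unknown)"
if [ "$fs_type" = "cgroup2fs" ]; then
  cgroup_mode="v2 (unified)"
else
  cgroup_mode="v1 or hybrid (filesystem type: $fs_type)"
fi
echo "cgroup mode: $cgroup_mode"
```

Docker exposes these controls as `docker run` flags:

- Limiting: `docker run -d --name limited --memory=256m --cpus=0.5 ubuntu:latest sleep infinity` caps the container at 256 MiB of memory and half a CPU.
- Accounting: `docker stats --no-stream limited` reports the container's current usage.
- Prioritization: `--cpu-shares` sets a relative CPU weight that only matters when containers compete for CPU.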

3. Union File Systems (UnionFS)

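A Union File System is a file system that allows multiple layers to be stacked on top of one another and presented as a single merged view. Docker's default storage driver, overlay2, uses OverlayFS (a union file system built into the Linux kernel) to build images from multiple layers, allowing:

- Efficient Storage: Each layer stores only the changes relative to the layer beneath it, and a running container adds one thin writable layer on top, so many containers can share the same read-only image layers.
- Image Reusability: Docker images are built from reusable layers, making them faster to create and deploy.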
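Docker can show you the layers an image is built from. The sketch below wraps two standard commands, `docker image history` and `docker image inspect`, in a helper. The function name `show_layers` is hypothetical, and the demo only runs when a `docker` CLI is available and the image exists locally.

```shell
#!/usr/bin/env bash
# Sketch: list the layers of a local image.
# show_layers is a hypothetical helper name, not part of the Docker CLI.
set -euo pipefail

show_layers() {
  local image="$1"
  # One row per build step; metadata-only steps such as CMD show 0B.
  docker image history "$image"
  # Content digests of the read-only layers that OverlayFS stacks together.
  docker image inspect --format '{{range .RootFS.Layers}}{{println .}}{{end}}' "$image"
}

# Only run the demo when the Docker CLI is installed and the image exists locally.
if command -v docker >/dev/null 2>&1 && docker image inspect ubuntu:latest >/dev/null 2>&1; then
  show_layers ubuntu:latest
fi
```

Images that share a base, such as two images both built `FROM ubuntu:latest`, reuse the same lower layers on disk. This is where the storage saving comes from.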

These Linux kernel features combined provide the lightweight, fast, and isolated environment that Docker containers are known for.

Docker History and Evolution

- 2013: Docker is released as an open-source project by Solomon Hykes and the team at dotCloud. It leverages LXC and quickly gains traction for simplifying application deployment. Later that year, dotCloud renames itself Docker, Inc.
- 2014: Docker 1.0 is released, and Docker replaces LXC with its own libcontainer library as the default execution driver. Docker begins to evolve into a full container ecosystem with support for orchestration, scaling, and networking.
- 2015: Docker introduces Docker Swarm for container orchestration, enabling users to manage clusters of Docker containers. The same year, Docker Compose and Docker Machine are released, further enhancing Docker's utility for developers.
- 2017: Docker introduces Docker Enterprise Edition (EE) to cater to large enterprises with security and management features. The Docker ecosystem begins to shift toward Kubernetes as the primary container orchestration platform.
- 2020: Docker focuses more on simplifying container development workflows and integrates with modern CI/CD tools, cloud platforms, and serverless architectures.

Variants of Docker: Docker CE vs. Docker EE

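Docker has historically shipped in two main variants tailored to different use cases. In 2019, Docker, Inc. sold the Enterprise Edition business to Mirantis, and the free edition is now distributed simply as Docker Engine.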

1. Docker CE (Community Edition)

- For developers and hobbyists.
- Open-source, free, and designed to work in individual or small-scale environments.
- It includes basic Docker tools, such as the Docker CLI, Docker Compose, and Docker Engine.
- Docker CE is ideal for those who want to experiment with Docker, learn about containers, or deploy small applications.

2. Docker EE (Enterprise Edition)

- For large enterprises and teams.
- Paid version with enhanced security, support, and features for managing Docker in production environments.
- Adds enterprise management features such as role-based access control (RBAC) for securing the environment, alongside Docker Swarm and Docker Content Trust, which are also available in Docker CE.
- Offers integrations with Kubernetes and other enterprise-grade tools.

Getting Hands-On with Docker

Installing Docker Community Edition on Ubuntu

```shell
# Add Docker's official GPG key:
sudo apt-get update
sudo apt-get install -y ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "${UBUNTU_CODENAME:-$VERSION_CODENAME}") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update

# Install Docker Engine, the CLI, containerd, and the Buildx and Compose plugins:
sudo apt-get install -y docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# Allow your user to run docker without sudo:
sudo usermod -aG docker "$USER"

# Start a shell with the new group membership (or log out and back in):
newgrp docker

# Verify the installation:
docker --version
docker images
```

Now that you understand the background, history, and technology behind Docker, let’s continue with a practical guide to using Docker in your development environment.

Downloading a Docker image from Docker Hub (remote Docker registry)

```shell
docker pull ubuntu:latest
```

Listing Docker images in the local image store

```shell
docker images
```

Deleting a Docker image from the local image store

```shell
docker pull hello-world:latest
docker images
docker rmi hello-world:latest
docker images
```

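Create a container and run it in the background (detached mode)

```shell
# -d detaches; -it keeps bash attached to a terminal so the container does not exit immediately
docker run -dit --name ubuntu1 --hostname ubuntu1 ubuntu:latest /bin/bash

# Open a shell inside the running container (typing "exit" leaves the container running)
docker exec -it ubuntu1 /bin/bash
```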

Listing the running containers

```shell
docker ps
```

Listing all containers irrespective of their running state

```shell
docker ps -a
```

Stop a running container

```shell
docker ps
docker stop <replace-this-with-your-container-name>
docker ps -a
```

Start an exited container

```shell
docker start <replace-this-with-your-container-name>
docker ps
```

Stopping multiple containers without naming them

```shell
# -q prints only the IDs of running containers
docker stop $(docker ps -q)
```

Starting multiple containers without naming them

```shell
# -a includes stopped containers; -q prints only their IDs
docker start $(docker ps -aq)
```
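One caveat applies to both commands: when no containers match, `$(docker ps -q)` expands to nothing, and `docker stop` exits with a "requires at least 1 argument" error. The sketch below wraps both commands in helper functions that skip the call when the list is empty. The names `stop_all_running` and `start_all_exited` are hypothetical. The start helper restricts itself to exited containers using the `--filter status=exited` option of `docker ps`.

```shell
#!/usr/bin/env bash
# Sketch: bulk stop/start helpers that tolerate an empty container list.
# stop_all_running and start_all_exited are hypothetical names.
set -euo pipefail

stop_all_running() {
  local ids=()
  mapfile -t ids < <(docker ps -q)                           # IDs of running containers
  if [ "${#ids[@]}" -eq 0 ]; then
    echo "No running containers."
    return 0
  fi
  docker stop "${ids[@]}"
}

start_all_exited() {
  local ids=()
  mapfile -t ids < <(docker ps -aq --filter status=exited)   # IDs of stopped containers
  if [ "${#ids[@]}" -eq 0 ]; then
    echo "No exited containers."
    return 0
  fi
  docker start "${ids[@]}"
}
```

To use the helpers, source the file in your shell, then call `stop_all_running` at the end of a session and `start_all_exited` to bring the same containers back.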

Conclusion

Docker is a powerful tool that simplifies the development, deployment, and scaling of applications. By containerizing your apps, you can eliminate environment issues, speed up development cycles, and ensure that your code runs consistently, whether it’s on your machine or in the cloud.

As a hands-on software professional, Docker can help you work faster, smarter, and more efficiently. Start using Docker today and revolutionize your development workflow!